Enterprises are collecting data at an unprecedented rate, whether from infrastructure or from internal and external applications. The latest and greatest in machine learning lets them gather meaningful insights from that data and use those insights for competitive advantage.

Depending on where they are in their AI/ML journey, they might use the public cloud or – quite often for cost reasons and depending on how they are set up – build an AI/ML infrastructure on-premises leveraging existing investments.

Kubernetes has been a catalyst in building AI/ML capabilities; however, setting up and maintaining such a stack yourself can be complex. As more and more Cisco Container Platform (CCP) customers become interested in machine learning, we continue to evolve our support to help them along that journey.

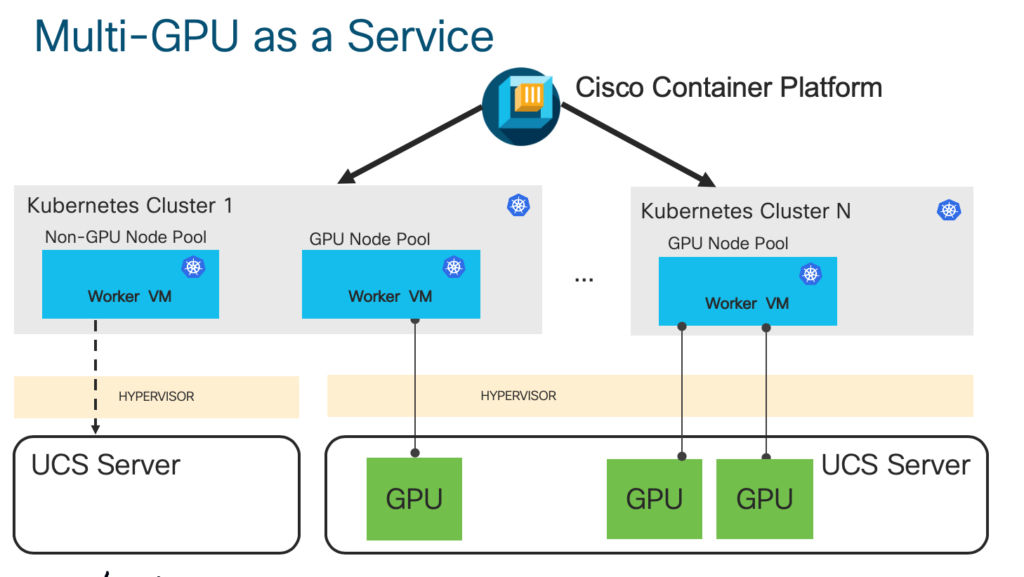

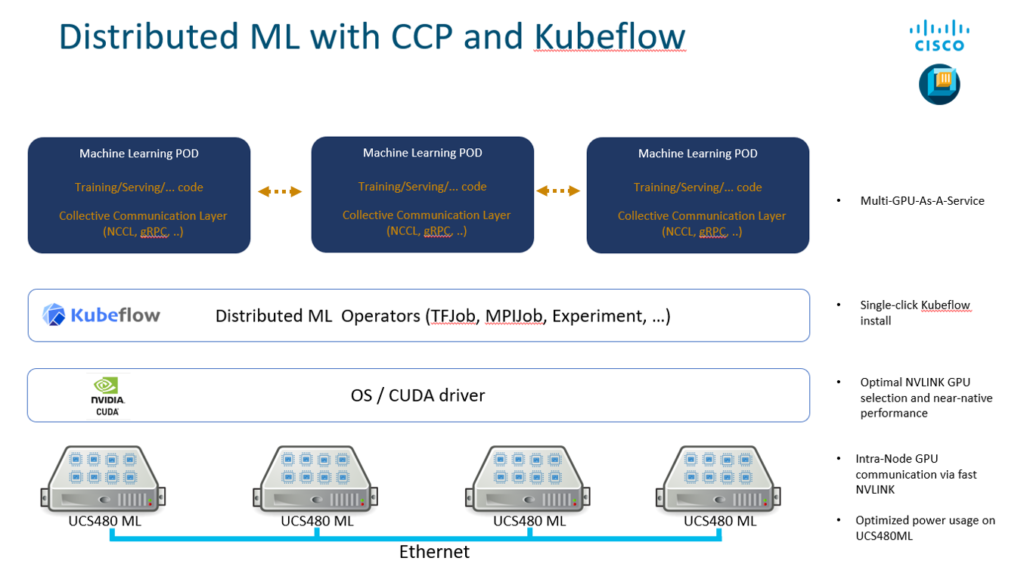

Great examples are the latest distributed machine learning capabilities with features like “multi-GPU as a Service” and Kubeflow.

Making the most out of GPUs for AI/ML

Parallel processing is at the core of distributed AI/ML development, and CCP’s full-stack integration with GPUs provides exactly that. It makes GPUs from the underlying infrastructure available on demand and optimizes how the bridged GPUs in the physical servers are allocated to each Kubernetes worker node. This is especially important when heavy jobs, many jobs, or both are submitted for processing.

In addition, CCP pre-installs the necessary NVIDIA drivers and CUDA toolkit, so operations teams do not need to install and manage them manually. As a result, customers can run their machine learning containers like any other container-based application and easily integrate them into their existing CI/CD pipelines.
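To make the GPU allocation idea more concrete, here is a minimal sketch of how a containerized training job could ask Kubernetes for GPUs. It assumes, rather than confirms, that the cluster exposes GPUs through the NVIDIA device plugin as the extended resource `nvidia.com/gpu`; the helper `gpu_pod_manifest`, the pod name, the image, and the command are hypothetical placeholders, not part of CCP. It uses only the Python standard library and emits an ordinary Pod manifest as JSON.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int) -> dict:
    """Build a Kubernetes Pod manifest that requests `gpus` whole GPUs.

    Assumption: GPUs are advertised by the NVIDIA device plugin as the
    extended resource "nvidia.com/gpu". Name, image and command are
    hypothetical placeholders for illustration only.
    """
    if gpus < 1:
        raise ValueError("gpus must be a positive integer")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "trainer",
                    "image": image,
                    # Hypothetical training entry point inside the image.
                    "command": ["python", "train.py"],
                    # GPUs are requested under limits; the scheduler only
                    # places the pod on a worker node with this many
                    # unallocated GPUs.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    manifest = gpu_pod_manifest("train-job", "example.registry/ml-trainer:latest", 2)
    # kubectl accepts JSON manifests as well as YAML.
    print(json.dumps(manifest, indent=2))
```

Saving the printed output to a file and running `kubectl apply -f` on it would submit the pod. Because the result is a plain Pod manifest, a CI/CD pipeline could generate and apply it the same way it handles any other containerized workload.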

Finally, our customers are also looking for a machine learning lifecycle manager that hides the complexity of Kubernetes. Kubeflow is gaining a lot of popularity as a machine learning lifecycle manager for Kubernetes, and CCP offers a single-click install. Stay tuned for future blog posts focusing on Kubeflow.

To learn more about building an AI/ML stack on top of Kubernetes and how you can use Cisco Container Platform to enable your data scientists and AI/ML engineers, join me at my Cisco Live! Barcelona session on Thursday, Jan 30th, from 1:30 to 2:30 pm.

Follow Meenakshi Kaushik on Twitter: @Mkaushik108.
