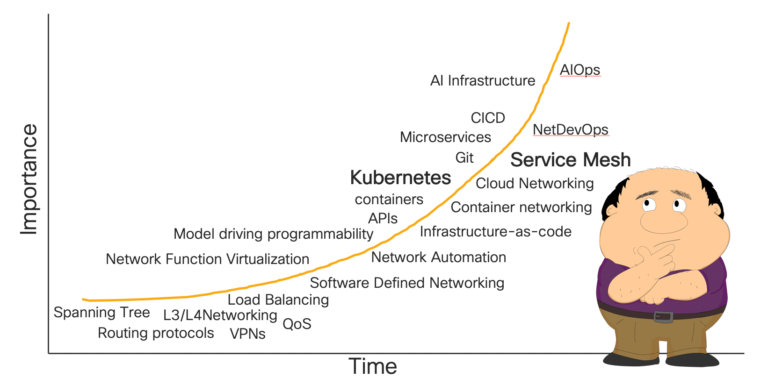

Learning never ends, and that has never been truer than for the trusty network engineer. Of late, network engineers have been moving up the stack, changing the way we deliver network services, becoming programmatic and using new tooling.

A not-so-scientific graph of what network engineers need to be aware of in 2020

The driving force behind these changes is the evolution of application architectures. In the era of modular development, applications are now collections of loosely coupled microservices, independently deployable, each potentially developed and managed by a separate small team. This enables rapid and frequent change, and lets teams deploy each service wherever it makes most sense (e.g. data centre, public cloud or edge). At the same time, Kubernetes (K8s) is quickly becoming the de facto platform upon which to deploy microservices.

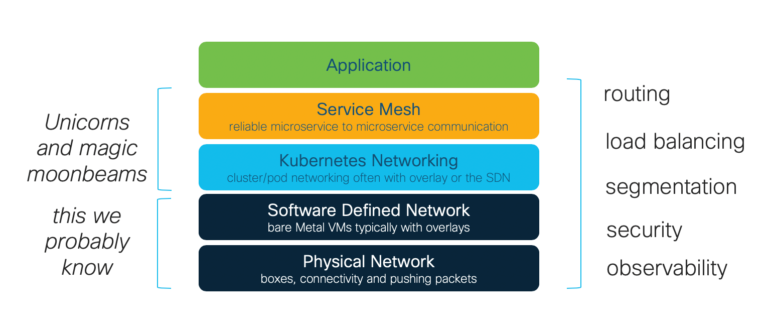

What does this mean for the network engineer? Well, routing, load balancing and security have been the staple of many over the years. It’s stuff engineers know very well and are very good at. But these capabilities are now appearing in some new abstractions within the application delivery stack.

For example, K8s implements its own networking model to meet the specific requirements of the K8s architecture. Included in this model are network policies, routing (pod to pod, node to node, and in and out of clusters), security and load balancing. Many of these networking functions can be delivered within K8s via a Container Network Interface (CNI) plugin such as Nuage or Flannel. Alternatively, you could leverage a lower-level networking abstraction such as the Cisco Application Centric Infrastructure (ACI), benefitting from one common network fabric for bare metal, virtual machines and containers.

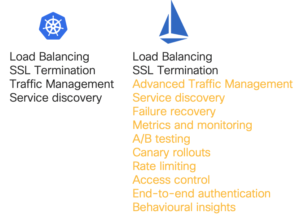

As K8s is a container orchestrator, designed for creating clusters and hosting pods, its networking model meets exactly those needs. However, K8s is not designed to solve the complexity of microservices networking. Additional developer tooling for microservices such as failure recovery, circuit breakers and end to end visibility is often embedded in code to address those aspects, adding significant development overhead.
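To give a feel for that overhead, here is a minimal sketch of the kind of circuit breaker a developer might end up embedding in a service. It is not taken from any particular library; the class name, the `max_failures` and `reset_after` parameters and the injectable `clock` are all assumptions made for illustration.

```python
import time


class CircuitBreaker:
    """Illustrative circuit breaker; names and defaults are hypothetical."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures  # consecutive failures before opening
        self.reset_after = reset_after    # seconds to wait before a trial call
        self.clock = clock                # injectable so it can be tested
        self.failures = 0
        self.opened_at = None             # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                # Circuit is open: fail fast without touching the backend.
                raise RuntimeError("circuit open: call skipped")
            # Cool-down has elapsed: allow one trial call ("half-open").
            # A single failure now re-opens the circuit immediately.
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        self.failures = 0  # any success closes the circuit fully
        return result
```

Every service that calls another service would need something like this, plus retries, timeouts and metrics, written and maintained in each language the teams use. That repetition is the development overhead in question.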

Enter, stage left, the service mesh.

“The term service mesh is used to describe the network of microservices that make up such applications and the interactions between them. As a service mesh grows in size and complexity, it can become harder to understand and manage. Its requirements can include discovery, load balancing, failure recovery, metrics, and monitoring. A service mesh also often has more complex operational requirements, like A/B testing, canary rollouts, rate limiting, access control, and end-to-end authentication”

Source: https://istio.io/docs/concepts/what-is-istio/

The above poses the question: is a service mesh a network layer? Well… Kind of. The service mesh ensures that communication between different services that live in containers is reliable and secure. It is implemented as its own infrastructure layer but, unlike K8s, it is aware of the application. Some of the capabilities it delivers to the application are recognisable network functions such as traffic management and load balancing, but these are executed at the microservices layer, and need that intimate knowledge of the application and its constituent services. Equally, the service mesh relies on lower level abstractions to deliver network functions as well.

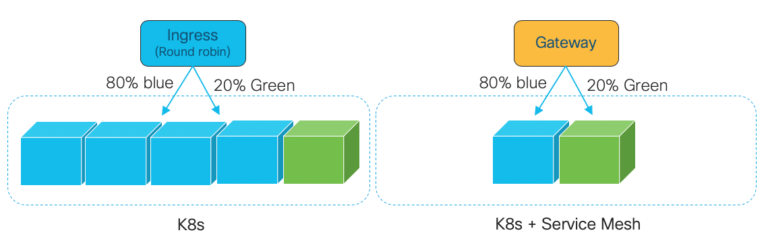

Service mesh networking vs K8s networking

To compare the capabilities of K8s and a service mesh, let’s look at the example of a canary deployment. The idea behind a canary deployment is that you can introduce a new version of your code into production and send a proportion of users to the new version while the rest remain on the current version. So, let’s say we send 20% of users to our V2 canary deployment and leave the other 80% on V1.

You can achieve this with K8s, but it requires some hand cranking: you have to size your new canary deployment in proportion to what already exists. For example, if you have 5 pods and want 20% of traffic to go to the V2 canary, you need 4 pods running V1 and 1 pod running V2. K8s load balancing will then distribute load roughly evenly across all 5 pods, and you achieve your 80/20 distribution.
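The arithmetic behind that hand cranking can be sketched in a few lines of Python. The function name `replicas_for_canary` is made up for this example; it simply reduces the percentage to its lowest-terms fraction to find the smallest pod counts that give the desired split, assuming traffic is spread evenly across pods.

```python
from fractions import Fraction


def replicas_for_canary(canary_percent: int) -> tuple[int, int]:
    """Return the smallest (stable, canary) pod counts for a given split.

    Assumes traffic is spread evenly over all pods, so the traffic share
    of the canary equals its share of the pod count.
    """
    if not 0 < canary_percent < 100:
        raise ValueError("canary_percent must be between 1 and 99")
    share = Fraction(canary_percent, 100)  # reduced automatically, 20/100 -> 1/5
    canary = share.numerator
    total = share.denominator
    return total - canary, canary


for pct in (20, 10, 1):
    stable, canary = replicas_for_canary(pct)
    print(f"{pct}% canary -> {stable} x V1 pods, {canary} x V2 pods")
```

The 20% case gives the 4 and 1 pods from the example above. The smaller the canary percentage, the more pods you need just to express the ratio: a 1% canary needs 100 pods in total, whether or not the workload calls for them.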

Canary Deployments with K8s and Service Mesh

With a service mesh this is much easier. Because the service mesh works at the microservices network layer, you simply create policies to distribute traffic across your available pods. As it is application-aware, it understands which pods are V1 and which pods are the V2 canaries, and will distribute traffic accordingly. If you only had two pods, one V1 and one V2, it would still distribute the traffic according to the 80/20 policy.
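As a rough illustration of policy-driven routing, the sketch below simulates a weighted choice between versions that is independent of how many pods each version has. It is a toy model, not how any particular mesh implements routing; `ROUTE_WEIGHTS`, `pick_version` and `simulate` are hypothetical names.

```python
import random
from collections import Counter

# Hypothetical policy: version name -> share of traffic in percent.
ROUTE_WEIGHTS = {"v1": 80, "v2": 20}


def pick_version(weights: dict[str, int], rng: random.Random) -> str:
    """Choose a version for one request according to the policy weights."""
    versions = list(weights)
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]


def simulate(requests: int, seed: int = 0) -> Counter:
    """Route a number of requests and count how many each version received."""
    rng = random.Random(seed)  # seeded so repeated runs give the same counts
    return Counter(pick_version(ROUTE_WEIGHTS, rng) for _ in range(requests))


counts = simulate(10_000)
for version, hits in sorted(counts.items()):
    print(f"{version}: {hits / 10_000:.1%} of requests")
```

The split comes from the policy alone, so changing the canary from 20% to 1% is a one-line weight change rather than a rescaling exercise.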

In terms of comparing them, we can think of K8s as providing container tooling, whereas a service mesh provides microservices tooling. They are not competitive. They complement each other.

Looking at the overall stack, we can see that there are now four different layers that can deliver specific networking functions – and you might need all of them.

Abstractions and more abstractions

How Does a Service Mesh Work?

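There are a number of service mesh options in the market right now. Istio, which Google helped launch, probably gets most of the headlines, but there are other credible options such as Linkerd (the Conduit project has since been merged into Linkerd 2). Envoy is often mentioned in the same breath, although strictly speaking it is the proxy that Istio and other meshes use as their data plane rather than a complete service mesh.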

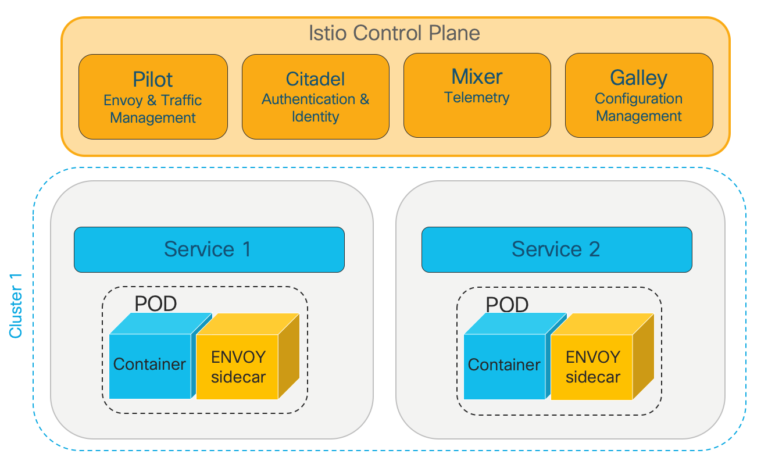

Istio Control Plane and Sidecar Proxies

Typically, a service mesh is implemented using sidecar proxies. These are just additional containers (yellow in the diagram above) that proxy all connections to the containers where our services live (blue in the diagram above). A control plane programs the sidecars with policy that determines exactly how traffic is managed around the cluster, secures connections between containers and provides deep insights into application performance. (We will have some follow-up blog posts going under the service mesh covers in the coming weeks.)

Ok. Great stuff. But what does this mean for the network engineer?

Many of the service mesh features will be familiar concepts to a network engineer. So, you can probably see why it’s important for network teams to understand what a service mesh is, and how, why and where these different capabilities are delivered in our stack. Chances are, you may know the team that is responsible for the service mesh, you may be in that team, or you may end up being the team that is responsible for the service mesh.

Delivering microservices works great in an ideal world of greenfields and unicorns, but the reality is that most don’t have that luxury, with microservices being deployed alongside or integrated with existing applications, data, infrastructure stacks and operational models. Even with a service mesh, delivering microservices in a hybrid fashion across your data centre and public cloud can get mighty complex. It’s imperative that network engineers understand this new service mesh abstraction: what it means for your day job, and how it keeps you relevant and part of the conversation. It may well spell great opportunity.

If you want to learn more, there are a number of service mesh sessions at Cisco Live Barcelona.

Service Mesh for Network Engineers – DEVNET-1697

Understanding Istio Service Mesh on Kubernetes – DEVNET-2022

DevNet Workshop: Let’s Play with Istio – DEVWKS-2814

But... why do I need a Service Mesh? – BRKCLD-2429

Follow Roger Dickinson on Twitter: @DCgubbins.

An interesting article. Makes me want to look into service meshes and K8s in general more deeply.

Tim, there are various container and K8s learning resources on DevNet (developer.cisco.com), with more coming shortly. I will also be posting a lab based on this blog in the coming weeks.